As a start, I’d like to talk a bit about dithering.

When I found out that Urban Runner’s VMD use the Indeo 3 codec for video frame data, I was a bit crushed: The resulting frames are in true color (in the YUV colorspace) and ScummVM doesn’t (yet?) support a true color mode for game graphics — only paletted 8-bit colors.

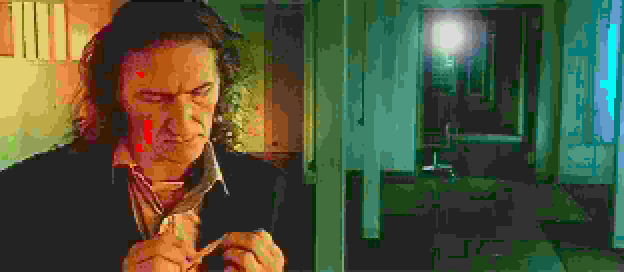

So at first, to test the Indeo 3 decoder (copied from the great FFmpeg project), I just wrote the frame to files. The results looked, after fiddling a bit, like that:

|

Note that the Indeo 3 frames are originally in YUV410, i.e. only the luminance (Y) channel is in full resolution while the two chrominance (U and V) channels are halved horizontally and vertically. Also, many frames are then again down-scaled, to save space.

To get it displayed in ScummVM, my first idea was (ab)using ScummVM’s overlay (which does support 16-bit graphics) for video playing, but that got shot down rather quickly in the IRC channel and I didn’t really like it either anyway.

Therefore, I looked at what the original did when Windows ran in 256 color mode and saw, that it dithered the images using an ordered dithering algorithm. I read a bit about dithering in the Wikipedia and found that an error distribution algorithm would probably look far better. An error distribution dithering algorithm distributes the errors (the difference between the color wanted and the color that’s in the palette) on to the surrounding pixels.

One problem: Dithering, no matter which exact algorithm, depends on finding the closed matching color in the palette. A quickly coded linear search in the palette proved to be far too slow for video playback, though. A binary search tree, my next idea, failed because there’s no meaningful ordering on color values.

After googling a bit, I stumbled upon an octree, a tree with eight children on each node. The basic concept is that you take the upper-most bit of each color component, construct an integer out of these and use that integer as an index in the list of children. Then you continue with the next bits and put the palette index in the final leaf. When looking up, you just do the same with the color you look for and find the index in the leaf. The results were this:

|

Still not all that great, but I thought it was because the palette must be still messed up (since the surrounding image was also still a bit wrong).

Then I implemented the dithering, Floyd-Steinberg namely:

|

However, a short time later, I found the distribution matrix for the Sierra-2-4A (a.k.a. “Filter Light”) algorith, which looks very similar to Floyd-Steinberg but is faster — it only distributes the error on 4 instead of 5 pixels and all fractions are achievable by shifting. The result looked liked this:

|

The very noticeable blobs I thought, as I already said, to be the effect of a broken palette, or of my dithering. However, I couldn’t find anything wrong with the palette loading and dithering code. Thankfully, eriktorbjorn told me that after changing the octree-lookup to a linear search, the video did look correct (albeit far too slow). When finally tried that as well, I found that he was right. So I fiddled a bit with the octree, then thought about how an octree actually works. I came to the conclusion that it wouldn’t work correctly for finding a nearest matching color, at least not how I understood and implemented it (if anybody can prove me wrong there, please do).

Hence, I swapped the octree with the only other structure I knew would work for such a task: a lookup table. Using the full 3*8bit color component, leading to a 16MB table, was obviously too much, so I compromised on using only the 6 most significant bits of each color component, like VGA does anyway.

The plain nearest matching color with the lookup table:

|

With Floyd-Steinberg dithering:

|

With Sierra-Light dithering:

|

One issue remained: While the palette has a complexity of O(1) in the lookup, building lies in O(n^4) (or O(n * m^3) if you reduce the color depth), which takes even on my 3GHz desktop noticeable time. Fortunately, _sev reminded me that the completely built table could be cached onto disk. Now the table can be loaded quickly after having been built only once.

In closing, it’s now quite okay. Still, if somebody knows of a better way to find the nearest matching color in a fixed palette, please let me know.

Well it seems to me, that Voronoi diagrams could be useful here. There is Bowyer-Watson algorithm to do it for dimension spaces larger than 2.

Hmm, as far as I understand, Voronoi diagrams can be used to find the nearest neighbours fixed points have between them. But what I need is the nearest neighbour of the complete colorspace (256*256*256 points) to 256 points, not to each other.

Voronoi diagram divides space into fixed number of mutualy exclusive parts, with single point in the center (which is one color from a fixed palette), so all points in these part of colorspace are closer to the central point than to others. So let’s say we have colorspace divided into regions according to fixed palette, and we have color c, that we want to map to this palette. All we have to do is checking to which region point c belongs.

Now the question is, if checking this is faster than calculating distances of point c to all colors from the palette and finding the closest one. I think, that these diagrams can be used to construct the lookup table at least.

I have to say, that I didn’t use this method for finding nearest color in the palette, but for totally different things, but when I was reading your text, it came to me that these diagrams may be usefull. I’m sure they can be (and are) used for finding closest match, but I don’t know if they are faster than other methods you tried.

Ah, okay… I tried something similar with letting the color point iteratively “grow” into cubes. Didn’t look that great and actually took way longer than just going through the colorspace in order.

But if you say that there are better algorithms for generating Voronoi diagram, I’ll look into it, thanks. 🙂

You might also look at this discussion: http://objectmix.com/graphics/133036-nearest-colour-thanks-palette-help.html

And there’s one more idea: why using fixed palette for all movies? Maybe it would be better to provide optimized palette for each movie?

Well, in Urban Runner’s case, I do actually need that one fixed palette (which is provided by the game files). The scripts rely on specific colors being at specific indices, for the semi-transparency of the menu dialog boxes, for example.

Oh, I see. I don’t know the game and from screenshots I thought the movies are played fullscreen without any GUI elements.

No, these aren’t actual screenshots, only dumped video frames.

In the real game, you have a border image, static images (often frames out of videos), menu elements, small videos that show what your “enemies” are doing right now, etc. all together.

Well, I must say I got involved into this topic. Probably because I played with graphical algorithms a long time ago. Have you thought about interpolating the chrominance signal? Maybe it would make those ugly blocks less visible?

It could look like this: http://rapidshare.com/files/182066185/urban_50_true.png.html

Yes, already thought about doing something like that, especially since the blocks aren’t that visible in the original (though I don’t know if that’s rather a bug in the Indeo3 decoder).

Might be, because if both resolution dimensions are halved, then blocks should be 2×2 pixels, instead of 4×4, which is the case in examples you attached.

Well, and the video data is actually in YUV410, i.e. the chroma resolutions are halfed again…

Could you please send me the palette that is used in the game? I’d like to play with it a little.

This is the raw RGB VGA (i.e. only 6 bit per component are used) palette the scripts specify:

http://drmccoy.de/zeugs/RGB.pal

The same palette converted to YUV, as then used by the engine:

http://drmccoy.de/zeugs/YUV.pal

I thought about another lookup table. I you divide the colorspace into 16x16x16 cubes, then for this palette, the largest number of colors in one cube is 9 and on average is is 2,8. So it is possible to make lookup array with 4 most significant bits of color components as indexes, which has 4096 elements. Each element is not a single palette index, but array or linked list of all palette colors in this cube. These few indexes can be checked by simple linear search.

After that I came up with another idea – maybe octree is not that bad. The octree is made by dividing colorspace into smaller and smaller cubes. The goal is to build the tree so, that each leaf is largest possible cube with only one color in it.

The algorithm of creating such tree would be something like this:

(we start from the root node – which is the whole colorspace cube)

1. If the cube associated with current node contains pixels with only one nearest color – then it is a leaf and should contain index to a palette.

2. Otherwise we divide it into 8 smaller cubes with halved dimmensions and add 8 children to current node and repeat algorithm for each node.

The question is how we can check if the given cube contains only one color? I’m not sure, but I suppose it is enough to check vertexes (is it correct plural form?) of the cube – if they have the same nearest color, then the whole cube must be one-color.

And one more idea – it is possible, that such tree may be too big (because voronoi cells’ faces are not parallel to cubes faces and we have to go down to the cube size equal to 1 to totally separate all colors). The idea is to stop building the tree if we have not more than 2 colors in a cube and store this 2 colors as an array.

Just one more thing – I have a proof that if 8 vertexes of the cube have the same color, then the whole cube has only one color. This is because Voronoi cells are always a CONVEX set. So if all vertexes of the cube are within Voronoi’s cell, then the whole cube must be also contained.